TeleGam: Combining Visualization and Verbalization for Interpretable Machine Learning

Abstract

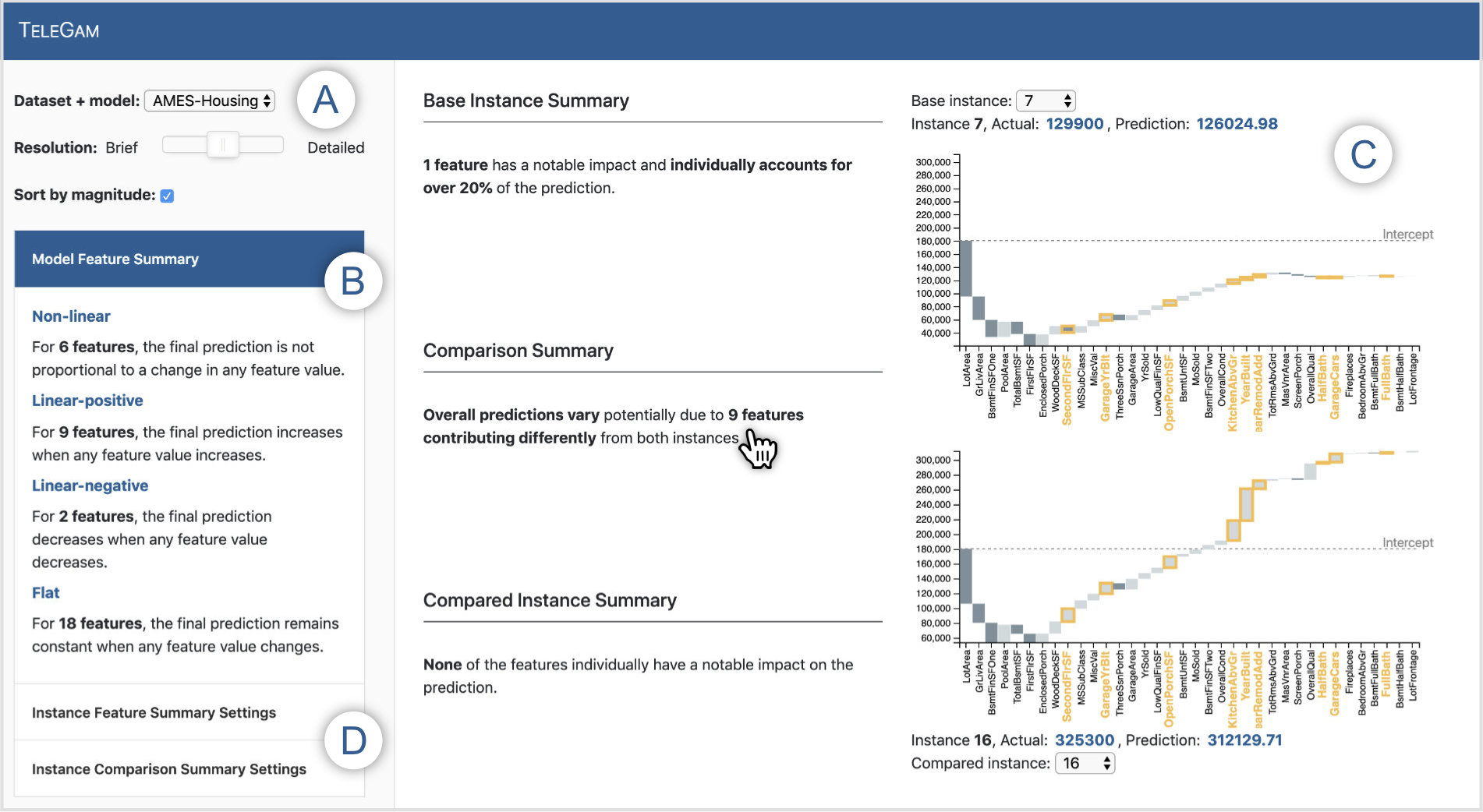

While machine learning (ML) continues to find success in solving problems previously thought to be hard, interpreting and exploring ML models remains challenging. Recent work has shown that visualizations are a powerful tool for debugging, analyzing, and interpreting ML models. However, depending on the complexity of the model (e.g., number of features), interpreting these visualizations can be difficult and may require additional expertise. Alternatively, textual descriptions, or verbalizations, can be a simple yet effective way to communicate or summarize key aspects of a model, such as the overall trend in a model’s predictions or comparisons between pairs of data instances. With the potential benefits of visualizations and verbalizations in mind, we explore how the two can be combined to aid ML interpretability. Specifically, we present a prototype system, TeleGam, that demonstrates how visualizations and verbalizations can collectively support interactive exploration of ML models such as generalized additive models (GAMs). We describe TeleGam’s interface and the heuristics it uses to generate verbalizations. We conclude by discussing how TeleGam can serve as a platform for future studies on understanding user expectations and designing novel interfaces for interpretable ML.

BibTeX

@article{hohman2019telegam,
  title={TeleGam: Combining Visualization and Verbalization for Interpretable Machine Learning},
  author={Hohman, Fred and Srinivasan, Arjun and Drucker, Steven M.},
  journal={IEEE Visualization Conference},
  year={2019},
  publisher={IEEE},
  doi={10.1109/VISUAL.2019.8933695},
  url={https://poloclub.github.io/telegam/}
}