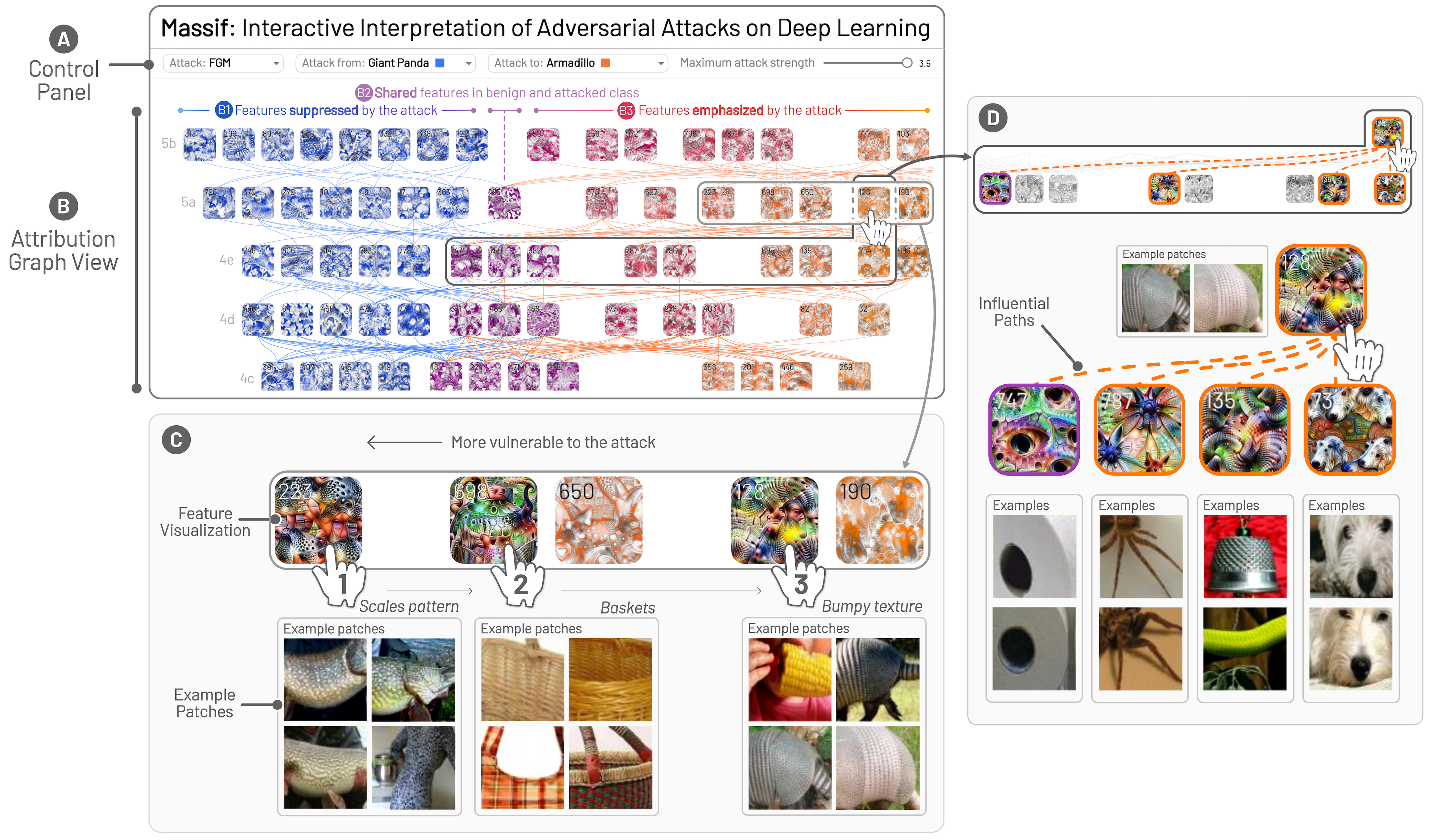

Massif: Interactive Interpretation of Adversarial Attacks on Deep Learning

Abstract

Deep neural networks (DNNs) are increasingly powering high-stakes applications such as autonomous cars and healthcare; however, DNNs are often treated as “black boxes” in such applications. Recent research has also revealed that DNNs are highly vulnerable to adversarial attacks, raising serious concerns over deploying DNNs in the real world. To overcome these deficiencies, we are developing Massif, an interactive tool for deciphering adversarial attacks. Massif identifies and interactively visualizes neurons and their connections inside a DNN that are strongly activated or suppressed by an adversarial attack. Massif provides both a high-level, interpretable overview of the effect of an attack on a DNN, and a low-level, detailed description of the affected neurons. These tightly coupled views in Massif help people better understand which input features are most vulnerable or important for correct predictions.
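As a rough illustration of what "strongly activated or suppressed by an adversarial attack" could mean in practice, the sketch below compares per-neuron activations for a benign input and its adversarial counterpart, then ranks neurons by the change. This is not Massif's actual implementation. The function name `rank_affected_neurons`, the parameter `k`, and the toy activation values are all hypothetical, and the activations are assumed to have already been extracted from one layer of a DNN.

```python
def rank_affected_neurons(benign_act, adversarial_act, k=3):
    """Return (excited, suppressed) neuron indices for one layer.

    Hypothetical helper: `benign_act` and `adversarial_act` are assumed to be
    equal-length sequences of per-neuron activations for the same layer.
    """
    if len(benign_act) != len(adversarial_act):
        raise ValueError("activation vectors must have the same length")
    if k <= 0:
        raise ValueError("k must be positive")

    # Change in activation caused by the attack (adversarial minus benign).
    delta = [a - b for b, a in zip(benign_act, adversarial_act)]

    # Neuron indices sorted from largest decrease to largest increase.
    by_delta = sorted(range(len(delta)), key=lambda i: delta[i])

    # Keep only neurons that actually moved in the stated direction.
    suppressed = [i for i in by_delta[:k] if delta[i] < 0]
    excited = [i for i in reversed(by_delta[-k:]) if delta[i] > 0]
    return excited, suppressed


# Toy activations for a five-neuron layer (made-up values).
benign = [0.9, 0.1, 0.5, 0.0, 0.7]
adversarial = [0.2, 0.8, 0.5, 0.6, 0.65]

excited, suppressed = rank_affected_neurons(benign, adversarial, k=2)
print("excited:", excited)
print("suppressed:", suppressed)
```

A real tool would likely aggregate such differences over many inputs and trace the connections between affected neurons across layers; this sketch covers only the single-layer, single-input ranking step.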

BibTeX

@inproceedings{das2020massif,
  title={Massif: Interactive Interpretation of Adversarial Attacks on Deep Learning},
  author={Wang, Zijie J. and Turko, Robert and Shaikh, Omar and Park, Haekyu and Das, Nilaksh and Hohman, Fred and Kahng, Minsuk and Chau, Duen Horng (Polo)},
  booktitle={Proceedings of the 2020 CHI Conference Extended Abstracts on Human Factors in Computing Systems},
  publisher={ACM},
  year={2020},
  doi={10.1145/3334480.3382977}
}