Keeping the Bad Guys Out: Protecting and Vaccinating Deep Learning with JPEG Compression

Abstract

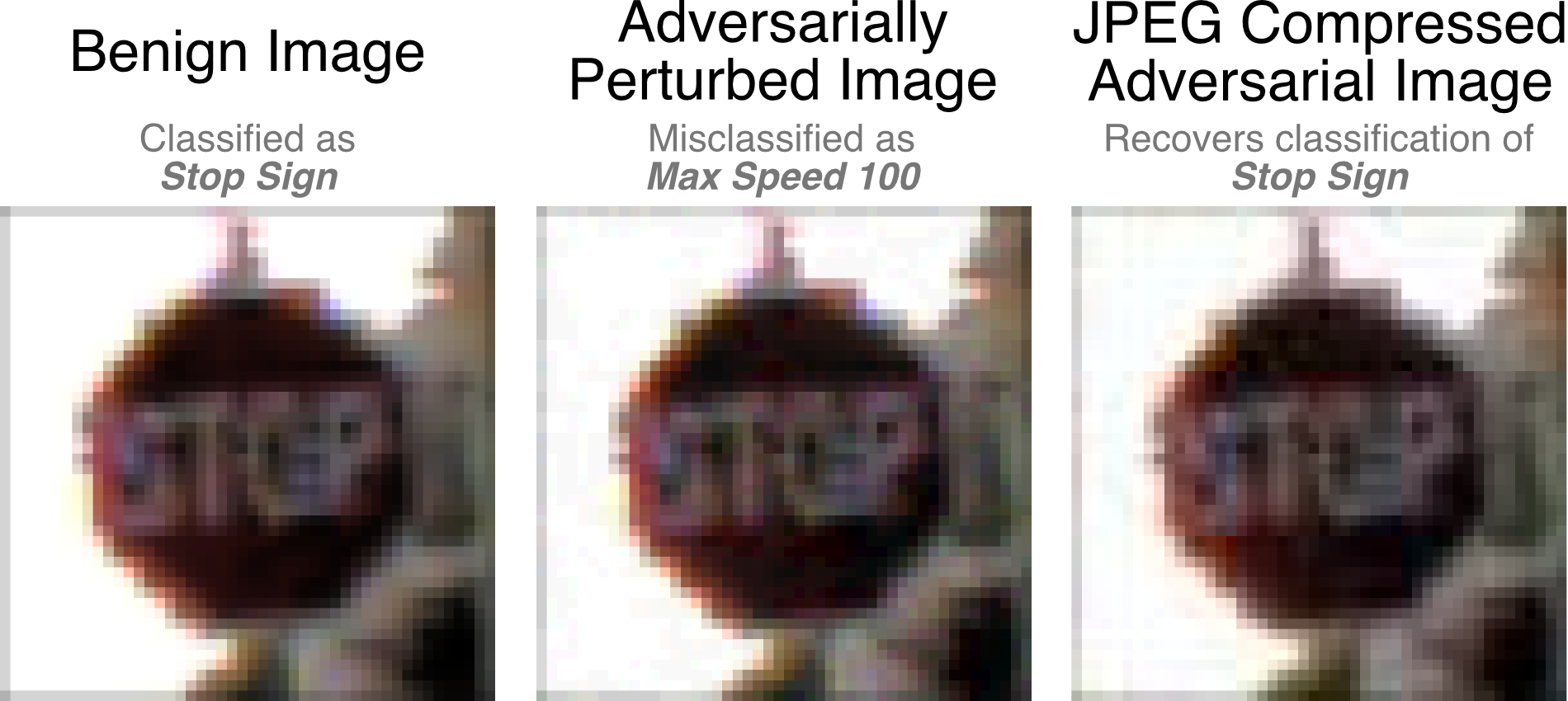

Deep neural networks (DNNs) have achieved great success in solving a variety of machine learning (ML) problems, especially in the domain of image recognition. However, recent research has shown that DNNs can be highly vulnerable to adversarially generated instances, which look normal to human observers but completely confuse DNNs. These adversarial samples are crafted by adding small perturbations to normal, benign images. Such perturbations, while imperceptible to the human eye, are picked up by DNNs and cause them to misclassify the manipulated instances with high confidence. In this work, we explore and demonstrate how systematic JPEG compression can work as an effective pre-processing step in the classification pipeline to counter adversarial attacks (e.g., Fast Gradient Sign Method, DeepFool) and dramatically reduce their effects. An important component of JPEG compression is its ability to remove high-frequency signal components inside square blocks of an image. Such an operation is equivalent to selective blurring of the image, helping remove additive perturbations. Further, we propose an ensemble-based technique that can be constructed quickly from a given well-performing DNN, and empirically show how such an ensemble that leverages JPEG compression can protect a model from multiple types of adversarial attacks, without requiring knowledge about the model.
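The pre-processing idea described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes the Pillow library for JPEG encoding, and the helper names `jpeg_compress` and `ensemble_predict`, the classifier callables, the pairing of each classifier with one quality setting, and the majority-vote rule are all hypothetical choices made for illustration, since the abstract does not specify how the ensemble combines its members.

```python
import io
from collections import Counter
from typing import Callable, Sequence

from PIL import Image


def jpeg_compress(image: Image.Image, quality: int) -> Image.Image:
    """Round-trip an image through in-memory JPEG encoding.

    Lower quality values quantize the high-frequency coefficients of each
    block more aggressively, which is the effect the abstract relies on
    to wash out small additive perturbations.
    """
    buffer = io.BytesIO()
    image.convert("RGB").save(buffer, format="JPEG", quality=quality)
    buffer.seek(0)
    compressed = Image.open(buffer)
    compressed.load()  # decode now, so the buffer is no longer needed
    return compressed


def ensemble_predict(
    image: Image.Image,
    classifiers: Sequence[Callable[[Image.Image], int]],
    qualities: Sequence[int],
) -> int:
    """Hypothetical ensemble: classifier i sees the image compressed at
    qualities[i], and the most common predicted label wins.

    Majority voting is an assumption of this sketch, not a detail taken
    from the abstract.
    """
    votes = [
        classifier(jpeg_compress(image, quality))
        for classifier, quality in zip(classifiers, qualities)
    ]
    return Counter(votes).most_common(1)[0][0]
```

In use, each entry of `classifiers` would wrap a trained model's prediction call, and `qualities` would hold the JPEG quality settings to try; which values work well is an empirical question that this sketch does not answer.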

BibTeX

@article{das2017keeping,
  title={Keeping the Bad Guys Out: Protecting and Vaccinating Deep Learning with JPEG Compression},
  author={Das, Nilaksh and Shanbhogue, Madhuri and Chen, Shang-Tse and Hohman, Fred and Chen, Li and Kounavis, Michael E and Chau, Duen Horng},
  journal={arXiv preprint arXiv:1705.02900},
  year={2017}
}