Bluff: Interactively Deciphering Adversarial Attacks on Deep Neural Networks

Abstract

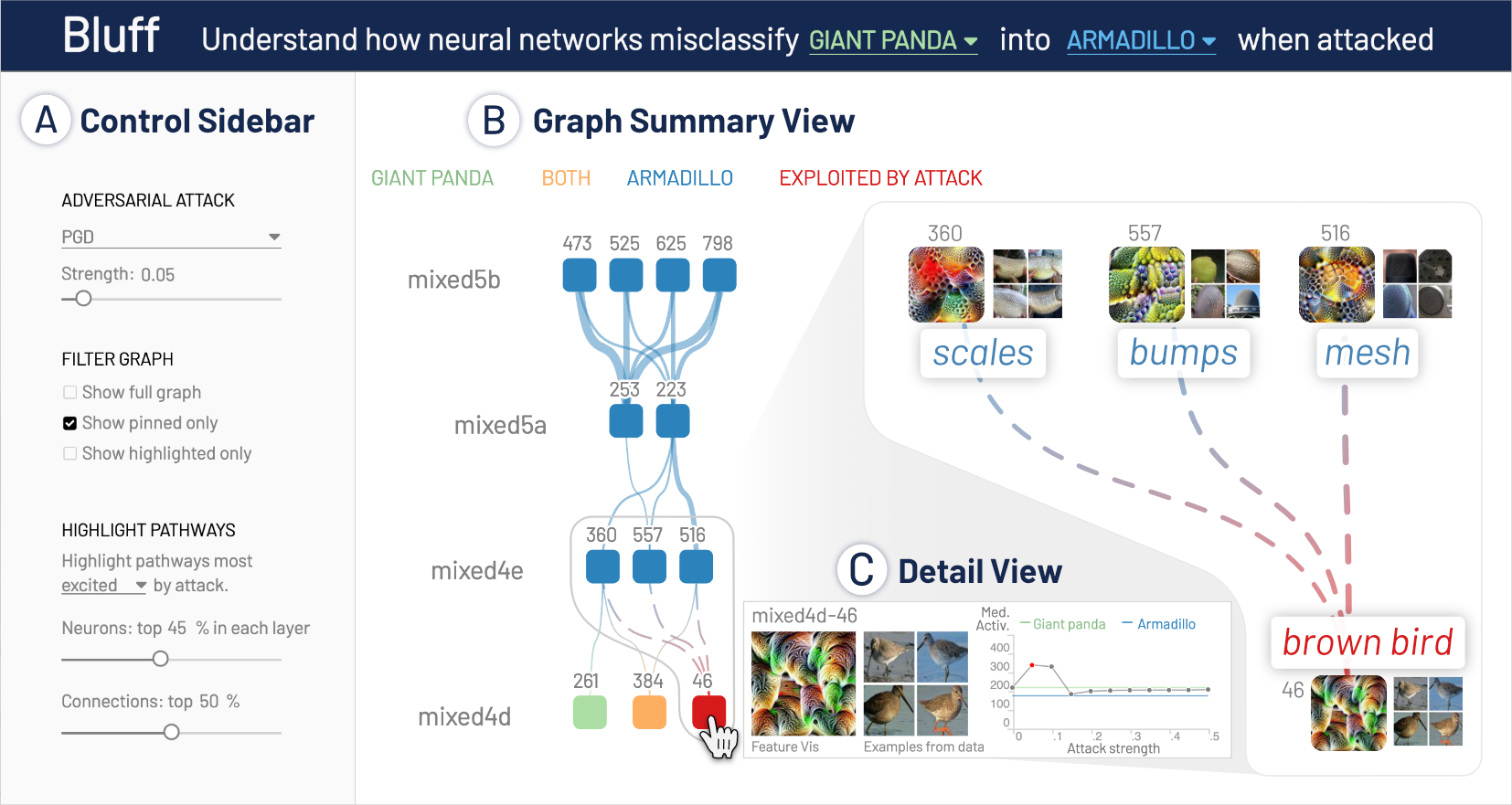

Deep neural networks (DNNs) are now commonly used in many domains. However, they are vulnerable to adversarial attacks: carefully crafted perturbations on data inputs that can fool a model into making incorrect predictions. Despite significant research on developing DNN attack and defense techniques, people still lack an understanding of how such attacks penetrate a model’s internals. We present Bluff, an interactive system for visualizing, characterizing, and deciphering adversarial attacks on vision-based neural networks. Bluff allows people to flexibly visualize and compare the activation pathways for benign and attacked images, revealing mechanisms that adversarial attacks employ to inflict harm on a model. Bluff is open-sourced and runs in modern web browsers.

BibTeX

@inproceedings{das2020bluff,
  title={Bluff: Interactively Deciphering Adversarial Attacks on Deep Neural Networks},
  author={Wang, Zijie J. and Turko, Robert and Shaikh, Omar and Park, Haekyu and Das, Nilaksh and Hohman, Fred and Kahng, Minsuk and Chau, Duen Horng (Polo)},
  booktitle={IEEE Visualization Conference (VIS)},
  publisher={IEEE},
  year={2020},
  doi={10.1109/VIS47514.2020.00061},
  url={https://github.com/poloclub/bluff}
}